Degrees of Freedom:

The degrees of freedom of a set of observations are the number of values which could be assigned arbitrarily within the specification of the system. For example, in a sample of size n grouped into k intervals, there are k-1 degrees of freedom, because k-1 frequencies can be chosen freely while the remaining one is fixed by the total sample size n. In some circumstances the term degrees of freedom is used to denote the number of independent comparisons which can be made between the members of a sample.
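A minimal Python sketch of the grouped-sample example, assuming the k interval frequencies are given as a list of counts. The function names `degrees_of_freedom` and `last_frequency` are hypothetical helpers for illustration. Once k-1 frequencies and the total n are known, the last frequency is forced, so only k-1 values are free.

```python
def degrees_of_freedom(k: int) -> int:
    """Degrees of freedom for a sample grouped into k intervals."""
    if k < 1:
        raise ValueError("need at least one interval")
    return k - 1


def last_frequency(free_counts: list[int], n: int) -> int:
    """The remaining frequency is not free: it is fixed by the total size n."""
    remaining = n - sum(free_counts)
    if remaining < 0:
        raise ValueError("free counts exceed the total sample size")
    return remaining


counts = [12, 7, 9, 5]          # illustrative frequencies in k = 4 intervals
n = sum(counts)                 # total sample size, here 33
free = counts[:-1]              # the k - 1 = 3 values that can be chosen freely
print(degrees_of_freedom(len(counts)))   # 3
print(last_frequency(free, n))           # 5
```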

Standard Deviations:

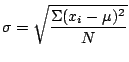

There are two types of standard deviation: the standard deviation of the population, denoted by $\sigma$, and the standard deviation of the sample, denoted by $s$. They are defined as

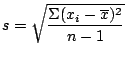
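The two definitions differ mainly in the divisor. A minimal Python sketch, assuming the usual conventions: the population standard deviation $\sigma$ divides the summed squared deviations from the mean by N, and the sample standard deviation $s$ divides the summed squared deviations from the sample mean by n-1. The helper names are hypothetical; the results are cross-checked against `statistics.pstdev` and `statistics.stdev` from the Python standard library.

```python
import math
import statistics


def population_sd(values: list[float]) -> float:
    """sigma: square root of the mean squared deviation from the mean (divisor N)."""
    mu = sum(values) / len(values)
    return math.sqrt(sum((x - mu) ** 2 for x in values) / len(values))


def sample_sd(values: list[float]) -> float:
    """s: squared deviations from the sample mean, summed and divided by n - 1."""
    n = len(values)
    if n < 2:
        raise ValueError("sample standard deviation needs at least two values")
    xbar = sum(values) / n
    return math.sqrt(sum((x - xbar) ** 2 for x in values) / (n - 1))


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]   # illustrative values
print(population_sd(data))   # 2.0
print(sample_sd(data))       # slightly larger, because of the n - 1 divisor
assert math.isclose(population_sd(data), statistics.pstdev(data))
assert math.isclose(sample_sd(data), statistics.stdev(data))
```

The n-1 divisor in $s$ compensates for measuring deviations from the sample mean rather than from the unknown population mean.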

Variance:

The variance is the square of the population standard deviation, i.e. $\sigma^2$. The square of the sample standard deviation ($s^2$) is termed the sample estimate of the population variance.

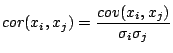
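A short sketch showing that each variance is the square of the corresponding standard deviation. It uses only the Python standard library: `statistics.pvariance` for the population variance $\sigma^2$ (divisor N) and `statistics.variance` for the sample estimate $s^2$ (divisor n-1). The data are made up for illustration.

```python
import math
import statistics

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]   # illustrative values

pop_var = statistics.pvariance(data)    # sigma^2, divisor N
samp_var = statistics.variance(data)    # s^2, divisor n - 1: the sample estimate

# Each variance equals the square of the matching standard deviation.
assert math.isclose(pop_var, statistics.pstdev(data) ** 2)
assert math.isclose(samp_var, statistics.stdev(data) ** 2)
print(pop_var, samp_var)   # 4.0 and 32/7 (about 4.571)
```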

Covariance Matrix:

Given $n$ sets of variates written as $\{x_1\}, \ldots, \{x_n\}$ (such as $m$ molecules each described by $n$ descriptors), the first-order covariance matrix is defined by

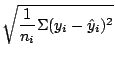
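For the molecules-by-descriptors case, a minimal pure-Python sketch. It assumes each row of `X` is one of m molecules and each column one of n descriptors (one set of variates), so entry (i, j) is the average product of the deviations of descriptors i and j from their means. The function name and the `sample` switch are hypothetical: `sample=True` divides by m-1 (the usual estimate from data), while `sample=False` divides by m, corresponding to an average over the whole population. The diagonal holds the variances of the individual descriptors.

```python
def covariance_matrix(X: list[list[float]], sample: bool = True) -> list[list[float]]:
    """Covariance matrix of the columns of X (rows = molecules, columns = descriptors)."""
    m = len(X)                      # number of molecules (observations)
    divisor = m - 1 if sample else m
    if divisor < 1:
        raise ValueError("not enough observations")
    n = len(X[0])                   # number of descriptors (sets of variates)
    means = [sum(row[j] for row in X) / m for j in range(n)]
    # Deviation of every value from the mean of its descriptor.
    dev = [[row[j] - means[j] for j in range(n)] for row in X]
    # Entry (i, j): summed products of deviations of descriptors i and j.
    return [
        [sum(d[i] * d[j] for d in dev) / divisor for j in range(n)]
        for i in range(n)
    ]


# Three hypothetical molecules described by two descriptors.
X = [[1.0, 2.0],
     [2.0, 4.0],
     [3.0, 7.0]]
V = covariance_matrix(X)
print(V)   # approximately [[1.0, 2.5], [2.5, 6.333]]
```

The matrix is symmetric, since swapping i and j does not change the sum of products.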

Root Mean Square Error: The individual errors are squared, added together, divided by the number of errors (i.e. the number of observations), and then the square root is taken. The result summarizes the overall error in a single number.
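The steps in this definition translate directly into code. A minimal Python sketch, assuming an error is the difference between an observed and a predicted value; the function name `rmse` and the example numbers are illustrative only.

```python
import math


def rmse(observed: list[float], predicted: list[float]) -> float:
    """Root mean square error between paired observed and predicted values."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [o - p for o, p in zip(observed, predicted)]
    squared_sum = sum(e * e for e in errors)    # square the errors and add them
    mean_square = squared_sum / len(errors)     # divide by the number of observations
    return math.sqrt(mean_square)               # take the square root


print(rmse([3.0, 5.0, 2.0, 7.0], [2.0, 5.0, 4.0, 6.0]))   # sqrt(1.5), about 1.2247
```

Because the errors are squared before averaging, a few large errors raise the RMSE more than the same total error spread evenly across observations.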